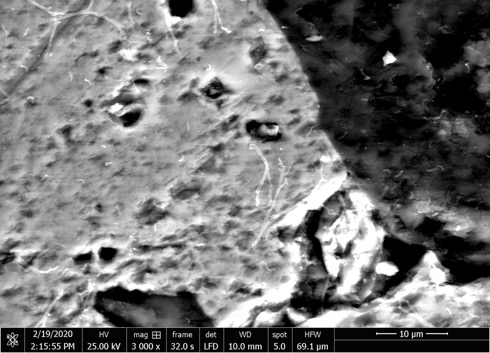

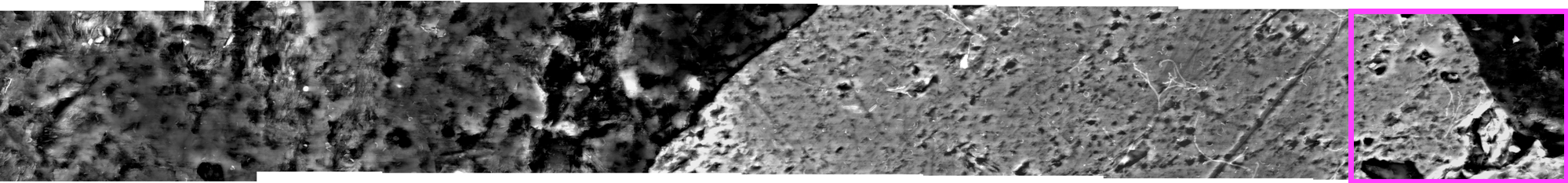

I wanted really high resolution, so I did this using 7 images at 3000X magnification and stitched them together with photomerge. Here's an individual image:

And its position in the photomerged image:

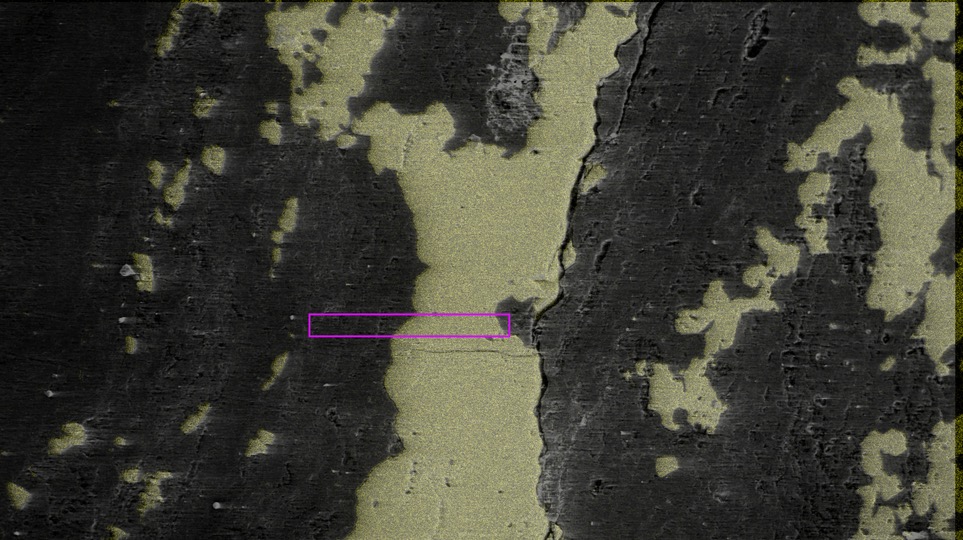

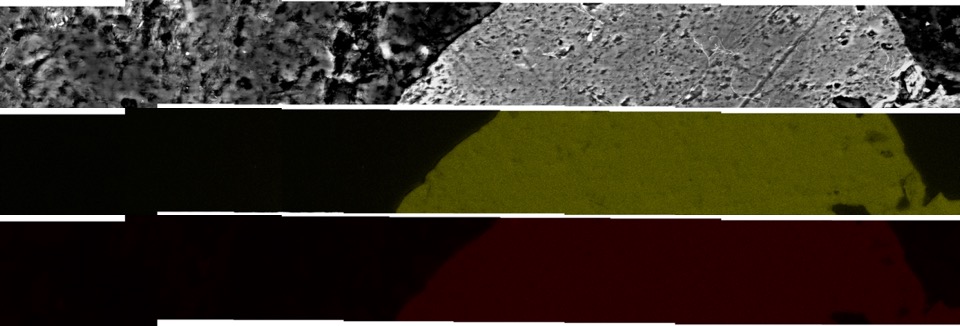

The problem is that the element maps don't have enough features for Photomerge to stitch them together. They're basically binary: if an element is detected, the pixel is some color (like red for iron or yellow for sulfur in my example images), and if it is not detected, the pixel is black. You can see that large portions of the element maps are mostly black.

I now have ~20 transects x 7 images each x ~10 elements, which comes to ~1400 images that need to be put together, hence the need for automation.
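To make the size of the batch concrete, here is a rough sketch that enumerates one placement job per transect, position, and element. The function name `planPlacements` and the transect and element identifiers are hypothetical; only the counts come from the numbers above.

```javascript
// Sketch only: builds a flat list of placement jobs.
// All identifiers passed in are hypothetical placeholders.
function planPlacements(transectIds, positions, elements) {
  var jobs = [];
  for (var t = 0; t < transectIds.length; t++) {
    for (var p = 0; p < positions.length; p++) {
      for (var e = 0; e < elements.length; e++) {
        jobs.push({
          transect: transectIds[t],
          position: positions[p],
          element: elements[e]
        });
      }
    }
  }
  return jobs;
}
```

With 20 transects, 7 positions, and 10 elements this yields 1400 jobs, but only 20 sets of stitching offsets are needed, because every element map in a transect can reuse the offsets found for that transect's rock images.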

My idea was to stitch together the rock images with Photomerge. The output of Photomerge is a smart object where each image is a layer. Then, I would use a script to get the top-left corner coordinates, the width, and the height of each of the 7 images in the photomerged object. I would then place each corresponding element map at the same coordinates and size to generate the "merged" element maps to overlay on the image. I tried to work on this myself, but I'm not proficient in JavaScript and couldn't wrap my head around the Photoshop API.
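The geometry part of this idea can be sketched as two small pure functions, with no Photoshop calls in them. They assume bounds are given as plain pixel numbers in `[left, top, right, bottom]` order, which is the order Photoshop's `ArtLayer.bounds` reports (there the entries are `UnitValue` objects, so they would need converting to numbers first). The function names are hypothetical, and the sketch assumes Photomerge only repositioned the images, without warping or scaling them.

```javascript
// Sketch only: pure geometry, assuming numeric pixel bounds
// in [left, top, right, bottom] order.

// Top-left corner, width, and height of a stitched rock-image layer.
function boundsToPlacement(bounds) {
  var left = bounds[0], top = bounds[1];
  var right = bounds[2], bottom = bounds[3];
  return { left: left, top: top, width: right - left, height: bottom - top };
}

// Offset that moves a layer at `current` bounds so that its
// top-left corner lands on the top-left corner of `target`.
function translationTo(current, target) {
  return { dx: target[0] - current[0], dy: target[1] - current[1] };
}
```

Inside Photoshop, the resulting `dx` and `dy` could presumably be passed to `ArtLayer.translate(dx, dy)` on the element-map layer, provided the element map has the same pixel dimensions as its rock image.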

I uploaded an example dataset on Github here: https://github.com/CaitlinCasar/dataSti ... le_dataset

The 7 transect positions are from left to right: -2, -1, 0, 1, 2, 3, 4. There are images of the rock and subdirectories with element data for each position. This question was also posted on Stack Overflow here:

https://stackoverflow.com/questions/606 ... 5_60675149
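Since the transect positions run left to right, one way to match stitched layers to positions is to sort the layers by their left edge. This is a minimal sketch under that assumption; `assignPositions` and the `{ name, left }` layer records are hypothetical and would have to be filled in from the real layer bounds.

```javascript
// Sketch only: maps position labels to layer names by sorting
// layers on their left edge. Layer records are hypothetical.
function assignPositions(layers, positions) {
  if (layers.length !== positions.length) {
    throw new Error("expected one layer per position");
  }
  // Copy before sorting so the caller's array is left untouched.
  var sorted = layers.slice().sort(function (a, b) {
    return a.left - b.left;
  });
  var byPosition = {};
  for (var i = 0; i < sorted.length; i++) {
    byPosition[positions[i]] = sorted[i].name;
  }
  return byPosition;
}
```

For the example dataset the second argument would be `[-2, -1, 0, 1, 2, 3, 4]`.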

If anyone could help, I will acknowledge them in the publication that comes out of this work!